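1. Preparation

Environment: CentOS 7.9

When to use kubeadm versus a binary installation of k8s

kubeadm is an official open-source tool for quickly bootstrapping a Kubernetes cluster, and it is currently the convenient, recommended approach. The two commands kubeadm init and kubeadm join are enough to create a cluster quickly.

When k8s is initialized with kubeadm, the control-plane components all run as pods, so they recover automatically after a failure.

Because kubeadm works like an install script that sets the cluster up for us, deployment is automated and simplified. The automation also hides many details, so you get little exposure to the individual modules; if you do not understand the k8s architecture and components well, problems are harder to troubleshoot.

kubeadm suits cases where k8s is deployed often or where a high degree of automation is required.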

2. Initialize the lab environment for the k8s cluster

2.1 Change each machine's IP to a static IP

Edit the file with vim /etc/sysconfig/network-scripts/ifcfg-ens33:

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static # static means a static address

IPADDR=192.168.216.158

NETMASK=255.255.255.0 # subnet mask

GATEWAY=192.168.216.2 # gateway

DNS1=192.168.216.2 # DNS server

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens33

DEVICE=ens33

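ONBOOT=yes

· After changing the configuration file, restart the network service for the change to take effect:

systemctl restart network

2.2 Configure hostnames (run the matching command on each machine)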

hostnamectl set-hostname k8s-master1 && bash

hostnamectl set-hostname k8s-master2 && bash

hostnamectl set-hostname k8s-node && bash

2.3 Configure the hosts file so that the machines can reach each other by hostname

Edit /etc/hosts on every machine and add the following three lines:

[root@k8s-master1 test]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.216.158 k8s-master1

192.168.216.159 k8s-master2

192.168.216.160 k8s-node

2.4 Configure passwordless SSH login between the hosts

[root@k8s-master1 ~]# ssh-keygen

[root@k8s-master1 ~]# ssh-copy-id k8s-master1

[root@k8s-master1 ~]# ssh-copy-id k8s-master2

[root@k8s-master1 ~]# ssh-copy-id k8s-node
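The three ssh-copy-id calls above follow one pattern, so they can be generated in a loop. A minimal sketch, assuming the hostnames from section 2.3 and the root user; the function name copy_id_commands is hypothetical, and it only prints the commands (a dry run) rather than executing them:

```shell
# Hostnames from section 2.3; logging in as root is an assumption.
NODES="k8s-master1 k8s-master2 k8s-node"

# Print one ssh-copy-id command per host. Nothing is executed here.
copy_id_commands() {
    for host in $NODES; do
        echo "ssh-copy-id root@${host}"
    done
}

copy_id_commands
```

To actually run the printed commands, pipe the output into a shell (copy_id_commands | sh) after ssh-keygen has created the key pair.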

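The other two nodes can set up passwordless login the same way, with ssh-keygen followed by ssh-copy-id.

2.5 Disable the swap partition to improve performance

[root@k8s-master1 ~]# swapoff -a # temporarily disable swap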

[root@k8s-master1 ~]# vim /etc/fstab

#/dev/mapper/centos-swap swap swap defaults 0 0

Commenting out the swap entry, as in the line above, disables swap permanently.
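The same edit can be scripted instead of made by hand in vim. A minimal sketch, assuming a sed that supports -E (GNU and BSD sed both do); the function name comment_out_swap is hypothetical, and it prints the result instead of editing the file in place so the change can be reviewed first:

```shell
# Print an fstab-style file with every active swap entry commented out.
# Lines that are already comments, and non-swap entries, pass through unchanged.
comment_out_swap() {
    sed -E 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/' "$1"
}
```

For example, comment_out_swap /etc/fstab > /tmp/fstab.new writes a candidate file that can be compared with the original before replacing it.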

Verify:

[root@k8s-master1 ~]# free -m

total used free shared buff/cache available

Mem: 7802 1215 5375 13 1211 6337

Swap: 0 0 0

2.6 Adjust the kernel parameters

[root@k8s-master1 ~]# modprobe br_netfilter

[root@k8s-master1 ~]# echo "modprobe br_netfilter" >> /etc/profile

[root@k8s-master1 ~]# cat > /etc/sysctl.d/k8s.conf <<EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

[root@k8s-master1 ~]# sysctl -p /etc/sysctl.d/k8s.conf

The other nodes need the same kernel parameters.
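Since every node needs the same three settings, it can help to check a node's file before running sysctl -p. A minimal sketch; the function name missing_sysctl_keys is hypothetical, and it only inspects the file text, not the live kernel values:

```shell
# The three parameters written to /etc/sysctl.d/k8s.conf above.
REQUIRED_KEYS="net.bridge.bridge-nf-call-ip6tables net.bridge.bridge-nf-call-iptables net.ipv4.ip_forward"

# Print every required key that is not set to 1 in the given file.
missing_sysctl_keys() {
    for key in $REQUIRED_KEYS; do
        # Escape the dots so they match literally in the regular expression.
        pattern=$(printf '%s' "$key" | sed 's/\./\\./g')
        grep -Eq "^[[:space:]]*${pattern}[[:space:]]*=[[:space:]]*1[[:space:]]*$" "$1" || echo "$key"
    done
}
```

Running missing_sysctl_keys /etc/sysctl.d/k8s.conf on a correctly configured node prints nothing.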

2.6.1 Why run modprobe br_netfilter?

Suppose /etc/sysctl.d/k8s.conf has been edited to add these three parameters:

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

Without the br_netfilter module loaded, sysctl -p /etc/sysctl.d/k8s.conf fails with:

sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-ip6tables: No such file or directory

sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-iptables: No such file or directory

Fix:

modprobe br_netfilter

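2.6.2 What does sysctl do?

It configures kernel parameters at runtime.

-p loads parameters from the specified file; if no file is given, it loads them from /etc/sysctl.conf.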
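Each sysctl key corresponds to a file under /proc/sys, with the dots in the key turned into path separators, which is why the error in 2.6.1 names a /proc/sys/net/bridge/... path. A minimal sketch of that mapping; the function name sysctl_path is hypothetical:

```shell
# Translate a sysctl key into the /proc/sys file that backs it.
sysctl_path() {
    printf '/proc/sys/%s\n' "$(printf '%s' "$1" | tr '.' '/')"
}

sysctl_path net.bridge.bridge-nf-call-iptables
# prints /proc/sys/net/bridge/bridge-nf-call-iptables
```

If that file does not exist, the kernel feature that provides it (here the br_netfilter module) is not loaded.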

2.6.3 Why enable the net.bridge.bridge-nf-call-iptables kernel parameter?

After docker is installed on CentOS, docker info prints the following warnings:

WARNING: bridge-nf-call-iptables is disabled

WARNING: bridge-nf-call-ip6tables is disabled

Fix:

vim /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

2.6.4 Why set net.ipv4.ip_forward = 1?

If kubeadm fails a check on ip_forward while initializing k8s, IP forwarding is not enabled and must be turned on.

2.7 Disable the firewall

[root@k8s-master1 ~]# systemctl stop firewalld && systemctl disable firewalld

[root@k8s-master2 ~]# systemctl stop firewalld && systemctl disable firewalld

[root@k8s-node ~]# systemctl stop firewalld && systemctl disable firewalld

2.8 Disable SELinux

[root@k8s-master1 ~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

[root@k8s-master2 ~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

[root@k8s-node ~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

· The change to /etc/selinux/config takes effect after a reboot.

2.9 Configure the Alibaba Cloud repo

[root@k8s-master1 ~]# cd /etc/yum.repos.d/

[root@k8s-master1 yum.repos.d]# mkdir Centos-repo

[root@k8s-master1 yum.repos.d]# mv ./* Centos-repo

[root@k8s-master1 yum.repos.d]# curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

[root@k8s-master2 ~]# cd /etc/yum.repos.d/

[root@k8s-master2 yum.repos.d]# mkdir Centos-repo

[root@k8s-master2 yum.repos.d]# mv ./* Centos-repo

[root@k8s-master2 yum.repos.d]# curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

[root@k8s-node ~]# cd /etc/yum.repos.d/

[root@k8s-node yum.repos.d]# mkdir Centos-repo

[root@k8s-node yum.repos.d]# mv ./* Centos-repo

[root@k8s-node yum.repos.d]# curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

2.9.1 Configure the Alibaba Cloud docker repo

[root@k8s-master1 ~]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@k8s-master2 ~]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@k8s-node ~]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

2.9.2 Configure the Alibaba Cloud repo needed to install the k8s components

[root@k8s-master1 ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

[root@k8s-master2 ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

[root@k8s-node ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

2.9.3 Configure time synchronization

# Install the ntpdate command

[root@k8s-master1 ~]# yum -y install ntpdate

[root@k8s-master1 ~]# ntpdate cn.pool.ntp.org

[root@k8s-master1 ~]# crontab -e

0 */1 * * * /usr/sbin/ntpdate cn.pool.ntp.org

[root@k8s-master2 ~]# yum -y install ntpdate

[root@k8s-master2 ~]# ntpdate cn.pool.ntp.org

[root@k8s-master2 ~]# crontab -e

0 */1 * * * /usr/sbin/ntpdate cn.pool.ntp.org

[root@k8s-node ~]# yum -y install ntpdate

[root@k8s-node ~]# ntpdate cn.pool.ntp.org

[root@k8s-node ~]# crontab -e

0 */1 * * * /usr/sbin/ntpdate cn.pool.ntp.org

2.9.4 Load the ipvs-related kernel modules

[root@k8s-master1 ~]# cat > /etc/sysconfig/modules/ipvs.modules <<'EOF'

#!/bin/bash

# Load every ipvs-related module that exists for the running kernel.

ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_sh ip_vs_nq ip_vs_sed ip_vs_ftp nf_conntrack"

for kernel_module in ${ipvs_modules}; do

/sbin/modinfo -F filename ${kernel_module} > /dev/null 2>&1

if [ $? -eq 0 ]; then

/sbin/modprobe ${kernel_module}

fi

done

EOF

[root@k8s-master1 ~]# scp /etc/sysconfig/modules/ipvs.modules k8s-master2:/etc/sysconfig/modules/

[root@k8s-master1 ~]# scp /etc/sysconfig/modules/ipvs.modules k8s-node:/etc/sysconfig/modules/

On every node, make the script executable, run it, and check that the modules loaded:

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep ip_vs

Question 1: what is ipvs?

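ipvs (IP Virtual Server) implements transport-layer load balancing, often called layer-4 LAN switching, as part of the Linux kernel. ipvs runs on a host and acts as a load balancer in front of a cluster of real servers. It forwards TCP- and UDP-based service requests to the real servers and makes their services appear as a virtual service on a single IP address.

Question 2: ipvs compared with iptables

kube-proxy supports two modes, iptables and ipvs. The ipvs mode was introduced in Kubernetes v1.8, reached beta in v1.9, and became generally available in v1.11. The iptables mode was added in v1.1 and has been kube-proxy's default mode since v1.2. Both ipvs and iptables are built on netfilter, but ipvs uses hash tables, so once the number of services is large the faster hash lookups pay off and service performance improves. How do the two modes differ?

1. ipvs offers better scalability and performance for large clusters

2. ipvs supports more sophisticated load-balancing algorithms than iptables (least load, least connections, weighted, and so on)

3. ipvs supports server health checks, connection retries, and similar features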
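Before relying on ipvs mode, it is worth confirming that the modules from section 2.9.4 are actually loaded. A minimal sketch that reads lsmod output and reports what is missing; the function name missing_modules and the short module list are assumptions chosen for illustration, not a definitive requirement list:

```shell
# Assumed minimum set for illustration; extend it to match ipvs.modules if needed.
REQUIRED_MODULES="ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack"

# Read lsmod-style text on stdin and print each required module
# that does not appear in the first column.
missing_modules() {
    loaded=$(awk 'NR > 1 { print $1 }')   # skip the "Module Size Used by" header
    for mod in $REQUIRED_MODULES; do
        printf '%s\n' "$loaded" | grep -qx "$mod" || echo "$mod"
    done
}
```

Typical use is lsmod | missing_modules, which prints nothing when every listed module is present.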

2.9.5 Install the base packages

[root@k8s-master1 ~]# yum install -y conntrack ntpdate ntp ipvsadm ipset jq iptables curl sysstat libseccomp wget vim net-tools git iproute lrzsz bash-completion tree bridge-utils unzip bind-utils gcc

[root@k8s-master2 ~]# yum install -y conntrack ntpdate ntp ipvsadm ipset jq iptables curl sysstat libseccomp wget vim net-tools git iproute lrzsz bash-completion tree bridge-utils unzip bind-utils gcc

[root@k8s-node ~]# yum install -y conntrack ntpdate ntp ipvsadm ipset jq iptables curl sysstat libseccomp wget vim net-tools git iproute lrzsz bash-completion tree bridge-utils unzip bind-utils gcc

3. Install the docker service

3.1 Install docker-ce

[root@k8s-master1 ~]# yum install docker-ce-20.10.6 docker-ce-cli-20.10.6 containerd.io -y

[root@k8s-master2 ~]# yum install docker-ce-20.10.6 docker-ce-cli-20.10.6 containerd.io -y

[root@k8s-node ~]# yum install docker-ce-20.10.6 docker-ce-cli-20.10.6 containerd.io -y

[root@k8s-master1 ~]# systemctl start docker && systemctl enable docker && systemctl status docker

[root@k8s-master2 ~]# systemctl start docker && systemctl enable docker && systemctl status docker

[root@k8s-node ~]# systemctl start docker && systemctl enable docker && systemctl status docker

3.2 Configure the docker registry mirrors and cgroup driver

[root@k8s-master1 ~]# vim /etc/docker/daemon.json

{

"registry-mirrors":["https://rsbud4vc.mirror.aliyuncs.com","https://registry.dockercn.com","https://docker.mirrors.ustc.edu.cn","https://dockerhub.azk8s.cn","http://hubmirror.c.163.com","http://qtid6917.mirror.aliyuncs.com",

"https://rncxm540.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

[root@k8s-master2 ~]# vim /etc/docker/daemon.json

{

"registry-mirrors":["https://rsbud4vc.mirror.aliyuncs.com","https://registry.dockercn.com","https://docker.mirrors.ustc.edu.cn","https://dockerhub.azk8s.cn","http://hubmirror.c.163.com","http://qtid6917.mirror.aliyuncs.com",

"https://rncxm540.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

[root@k8s-node ~]# vim /etc/docker/daemon.json

{

"registry-mirrors":["https://rsbud4vc.mirror.aliyuncs.com","https://registry.dockercn.com","https://docker.mirrors.ustc.edu.cn","https://dockerhub.azk8s.cn","http://hubmirror.c.163.com","http://qtid6917.mirror.aliyuncs.com",

"https://rncxm540.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

· The exec-opts entry switches docker's cgroup driver to systemd (the default is cgroupfs). kubelet uses systemd here, and the two must match.

· Reload and restart docker, then check its status

[root@k8s-master1 ~]# systemctl daemon-reload && systemctl restart docker

[root@k8s-master1 ~]# systemctl status docker

4. Install the packages needed to initialize k8s

[root@k8s-master1 ~]# yum install -y kubelet-1.20.6 kubeadm-1.20.6 kubectl-1.20.6

[root@k8s-master1 ~]# systemctl enable kubelet && systemctl start kubelet

[root@k8s-master1 ~]# systemctl status kubelet

[root@k8s-master2 ~]# yum install -y kubelet-1.20.6 kubeadm-1.20.6 kubectl-1.20.6

[root@k8s-master2 ~]# systemctl enable kubelet && systemctl start kubelet

[root@k8s-master2 ~]# systemctl status kubelet

[root@k8s-node ~]# yum install -y kubelet-1.20.6 kubeadm-1.20.6 kubectl-1.20.6

[root@k8s-node ~]# systemctl enable kubelet && systemctl start kubelet

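[root@k8s-node ~]# systemctl status kubelet

· At this point kubelet is not in the running state. That is normal and can be ignored; kubelet becomes healthy once the k8s components come up.

Note: what each package does

kubeadm: the tool that initializes the k8s cluster

kubelet: installed on every node in the cluster; it starts the pods

kubectl: used to deploy and manage applications, inspect resources, and create, delete, and update components

5. High availability for the k8s apiserver with keepalived + nginx

5.1 Install nginx and keepalived on the primary and backup

On k8s-master1 and k8s-master2:

[root@k8s-master1 ~]# yum install nginx keepalived -y

[root@k8s-master2 ~]# yum install nginx keepalived -y

5.2 Edit the nginx configuration file (identical on primary and backup)

Primary nginx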

[root@k8s-master1 ~]# vim /etc/nginx/nginx.conf

user nginx;

worker_processes auto;

error_log /var/log/nginx/error.log;

pid /run/nginx.pid;

include /usr/share/nginx/modules/*.conf;

events {

worker_connections 1024;

}

# Layer-4 load balancing for the apiserver on the two masters

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-apiserver {

server 192.168.216.158:6443; # Master1 APISERVER IP:PORT

server 192.168.216.159:6443; # Master2 APISERVER IP:PORT

}

server {

listen 16443; # nginx shares the host with the master, so this port must not be 6443 or it would conflict with the apiserver

proxy_pass k8s-apiserver;

}

}

http {

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

sendfile on;

tcp_nopush on;

tcp_nodelay on;

keepalive_timeout 65;

types_hash_max_size 2048;

include /etc/nginx/mime.types;

default_type application/octet-stream;

server {

listen 80 default_server;

server_name _;

location / {

}

}

}

Backup nginx

[root@k8s-master2 ~]# vim /etc/nginx/nginx.conf

user nginx;

worker_processes auto;

error_log /var/log/nginx/error.log;

pid /run/nginx.pid;

include /usr/share/nginx/modules/*.conf;

events {

worker_connections 1024;

}

# Layer-4 load balancing for the apiserver on the two masters

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-apiserver {

server 192.168.216.158:6443; # Master1 APISERVER IP:PORT

server 192.168.216.159:6443; # Master2 APISERVER IP:PORT

}

server {

listen 16443; # nginx shares the host with the master, so this port must not be 6443 or it would conflict with the apiserver

proxy_pass k8s-apiserver;

}

}

http {

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

sendfile on;

tcp_nopush on;

tcp_nodelay on;

keepalive_timeout 65;

types_hash_max_size 2048;

include /etc/nginx/mime.types;

default_type application/octet-stream;

server {

listen 80 default_server;

server_name _;

location / {

}

}

}

5.3 Configure keepalived

Primary keepalived

[root@k8s-master1 ~]# vim /etc/keepalived/keepalived.conf

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_MASTER

}

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh"

}

vrrp_instance VI_1 {

state MASTER

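interface ens33 # change to the actual NIC name

virtual_router_id 51 # VRRP router ID; must be unique per instance

priority 100 # priority; the backup server uses 90

advert_int 1 # interval between VRRP heartbeat advertisements, 1 second by default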

authentication {

auth_type PASS

auth_pass 1111

}

# Virtual IP

virtual_ipaddress {

192.168.216.163/24

}

track_script {

check_nginx

}

}

Backup keepalived

[root@k8s-master2 ~]# vim /etc/keepalived/keepalived.conf

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_BACKUP

}

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh"

}

vrrp_instance VI_1 {

state BACKUP

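interface ens33 # change to the actual NIC name

virtual_router_id 51 # VRRP router ID; must be unique per instance

priority 90 # priority; lower than the primary's 100

advert_int 1 # interval between VRRP heartbeat advertisements, 1 second by default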

authentication {

auth_type PASS

auth_pass 1111

}

# Virtual IP

virtual_ipaddress {

192.168.216.163/24

}

track_script {

check_nginx

}

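}

# vrrp_script: the script that checks whether nginx is working (failover is decided from nginx's state)

# virtual_ipaddress: the virtual IP (VIP)

5.4 Create the nginx process-check script on the primary and backup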

[root@k8s-master1 ~]# vim /etc/keepalived/check_nginx.sh

#!/bin/bash

count=$(ps -ef |grep nginx | grep sbin | egrep -cv "grep|$$")

if [ "$count" -eq 0 ];then

systemctl stop keepalived

fi

[root@k8s-master1 ~]# chmod +x /etc/keepalived/check_nginx.sh

[root@k8s-master2 ~]# vim /etc/keepalived/check_nginx.sh

#!/bin/bash

count=$(ps -ef |grep nginx | grep sbin | egrep -cv "grep|$$")

if [ "$count" -eq 0 ];then

systemctl stop keepalived

fi

[root@k8s-master2 ~]# chmod +x /etc/keepalived/check_nginx.sh

5.5 Start the services

[root@k8s-master1 ~]# systemctl daemon-reload

[root@k8s-master1 ~]# systemctl start nginx

[root@k8s-master1 ~]# systemctl start keepalived

[root@k8s-master1 ~]# systemctl enable nginx keepalived

[root@k8s-master1 ~]# systemctl status keepalived

[root@k8s-master2 ~]# systemctl daemon-reload

[root@k8s-master2 ~]# systemctl start nginx

[root@k8s-master2 ~]# systemctl start keepalived

[root@k8s-master2 ~]# systemctl enable nginx keepalived

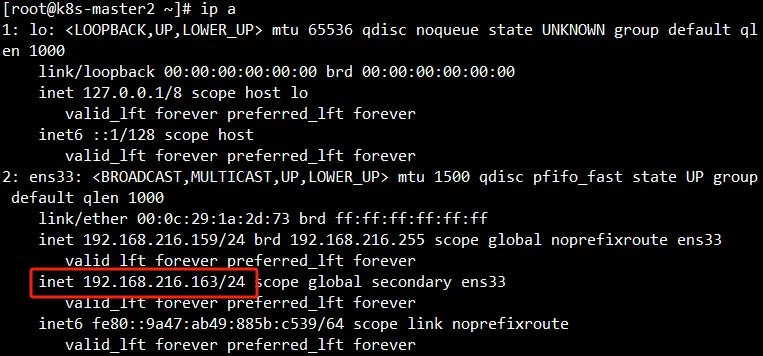

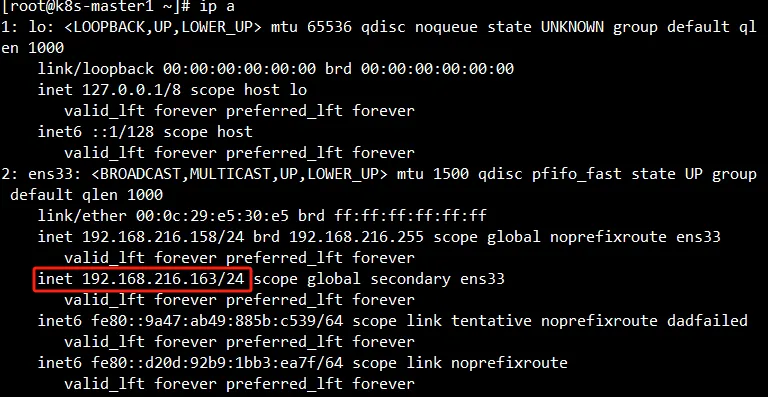

[root@k8s-master2 ~]# systemctl status keepalived

5.6 Check that the VIP is bound

[root@k8s-master1 ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:e5:30:e5 brd ff:ff:ff:ff:ff:ff

inet 192.168.216.158/24 brd 192.168.216.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.216.163/24 scope global secondary ens33

valid_lft forever preferred_lft forever

inet6 fe80::9a47:ab49:885b:c539/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::d20d:92b9:1bb3:ea7f/64 scope link noprefixroute

valid_lft forever preferred_lft forever
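In the output above the VIP shows up as the secondary address 192.168.216.163/24 on ens33. Rather than scanning the output by eye, the check can be scripted. A minimal sketch; the function name has_vip is hypothetical, and it reads ip addr text from stdin so it can be run on either master:

```shell
# Succeed if the `ip addr` text on stdin contains the given IPv4 address.
# grep -F treats the dots literally; the trailing "/" keeps .16 from matching .163.
has_vip() {
    grep -qF "inet $1/"
}
```

For example, ip -4 addr show ens33 | has_vip 192.168.216.163 && echo "this node holds the VIP" identifies the node that currently owns the address, which is useful during the failover test that follows.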

5.7 Test keepalived

Stop nginx on k8s-master1; the VIP moves to k8s-master2.

[root@k8s-master1 ~]# systemctl stop nginx

[root@k8s-master1 ~]# ip addr

# Start nginx and keepalived on k8s-master1 again, and the VIP moves back

[root@k8s-master1 ~]# systemctl daemon-reload

[root@k8s-master1 ~]# systemctl start nginx

[root@k8s-master1 ~]# systemctl start keepalived

[root@k8s-master1 ~]# ip addr

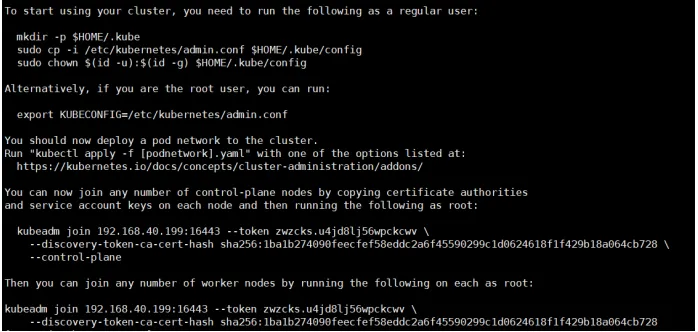

6. Initialize the k8s cluster

# Initialize the k8s cluster with kubeadm

# Upload the offline image package needed for initialization to k8s-master1, k8s-master2, and k8s-node, then load it manually:

[root@k8s-master1 ~]# docker load -i k8simage-1-20-6.tar.gz

[root@k8s-master2 ~]# docker load -i k8simage-1-20-6.tar.gz

[root@k8s-node ~]# docker load -i k8simage-1-20-6.tar.gz
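The kubeadm init step that follows reads kubeadm-config.yaml, whose contents are not shown in this walkthrough. A minimal sketch of what such a file might contain for kubeadm 1.20 is given here as a shell function that prints it. Everything in it is an assumption to adapt: the VIP is the address seen on ens33 in section 5.6, port 16443 is the nginx listener from section 5.2, the pod and service CIDRs are illustrative values, and the function name render_kubeadm_config is hypothetical:

```shell
# Assumption: the virtual IP bound by keepalived (see the ip addr output in 5.6).
VIP="192.168.216.163"

# Print a kubeadm configuration that points the control-plane endpoint
# at the nginx listener on the VIP and switches kube-proxy to ipvs mode.
render_kubeadm_config() {
    cat <<EOF
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.20.6
controlPlaneEndpoint: "$1:16443"
networking:
  podSubnet: 10.244.0.0/16      # assumption: any range that does not overlap the host network
  serviceSubnet: 10.96.0.0/12   # assumption: illustrative value
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
EOF
}

render_kubeadm_config "$VIP"
```

Redirecting the output (render_kubeadm_config "$VIP" > kubeadm-config.yaml) produces a file that can be reviewed and edited before it is passed to kubeadm init --config.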

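[root@k8s-master1 ~]# kubeadm init --config kubeadm-config.yaml

A second way to obtain the images is to retag copies from the Alibaba Cloud registry under the k8s.gcr.io names. The tags below are for v1.22.0; match them to the versions printed by kubeadm config images list --kubernetes-version v1.20.6.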

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.22.0 k8s.gcr.io/kube-controller-manager:v1.22.0

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.22.0 k8s.gcr.io/kube-proxy:v1.22.0

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.22.0 k8s.gcr.io/kube-apiserver:v1.22.0

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.22.0 k8s.gcr.io/kube-scheduler:v1.22.0

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.8.4 k8s.gcr.io/coredns/coredns:v1.8.4

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.0-0 k8s.gcr.io/etcd:3.5.0-0

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.5 k8s.gcr.io/pause:3.5 显示如下,表示初始化成功

kubeadm join 192.168.40.199:16443 --token zwzcks.u4jd8lj56wpckcwv \

--discovery-token-ca-cert-hash

sha256:1ba1b274090feecfef58eddc2a6f45590299c1d0624618f1f429b18a064cb728 \

--control-plane

#上面命令是把 master 节点加入集群,需要保存下来,每个人的都不一样

kubeadm join 192.168.40.199:16443 --token zwzcks.u4jd8lj56wpckcwv \

--discovery-token-ca-cert-hash

sha256:1ba1b274090feecfef58eddc2a6f45590299c1d0624618f1f429b18a064cb728

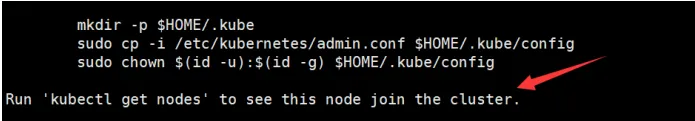

#上面命令是把 node 节点加入集群,需要保存下来,每个人的都不一样6.1、根据提示消息,在Master节点上使用kubectl工具

[root@k8s-master1 ~]# mkdir -p $HOME/.kube

[root@k8s-master1 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master1 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

6.2 Scale the k8s cluster: add a master node

# Copy the certificates from k8s-master1 to k8s-master2

Create the certificate directories on k8s-master2:

[root@k8s-master2 ~]# cd /root && mkdir -p /etc/kubernetes/pki/etcd && mkdir -p ~/.kube/

# Then copy the certificates from k8s-master1 to k8s-master2:

[root@k8s-master1 ~]# scp /etc/kubernetes/pki/ca.crt k8s-master2:/etc/kubernetes/pki/

[root@k8s-master1 ~]# scp /etc/kubernetes/pki/ca.key k8s-master2:/etc/kubernetes/pki/

[root@k8s-master1 ~]# scp /etc/kubernetes/pki/sa.key k8s-master2:/etc/kubernetes/pki/

[root@k8s-master1 ~]# scp /etc/kubernetes/pki/sa.pub k8s-master2:/etc/kubernetes/pki/

[root@k8s-master1 ~]# scp /etc/kubernetes/pki/front-proxy-ca.crt k8s-master2:/etc/kubernetes/pki/

[root@k8s-master1 ~]# scp /etc/kubernetes/pki/front-proxy-ca.key k8s-master2:/etc/kubernetes/pki/

[root@k8s-master1 ~]# scp /etc/kubernetes/pki/etcd/ca.crt k8s-master2:/etc/kubernetes/pki/etcd/

[root@k8s-master1 ~]# scp /etc/kubernetes/pki/etcd/ca.key k8s-master2:/etc/kubernetes/pki/etcd/

6.2.1 On k8s-master1, print the join command:

[root@k8s-master1 ~]# kubeadm token create --print-join-command

# Output:

kubeadm join 192.168.216.163:16443 --token y23a82.hurmcpzedblv34q8 --discovery-token-ca-cert-hash sha256:1ba1b274090feecfef58eddc2a6f45590299c1d0624618f1f429b18a064cb728

6.2.2 On k8s-master2, run the join command printed by k8s-master1, adding --control-plane

Join k8s-master2 to the cluster:

[root@k8s-master2 ~]# kubeadm join 192.168.216.163:16443 --token zwzcks.u4jd8lj56wpckcwv \
--discovery-token-ca-cert-hash sha256:1ba1b274090feecfef58eddc2a6f45590299c1d0624618f1f429b18a064cb728 \
--control-plane --ignore-preflight-errors=SystemVerification

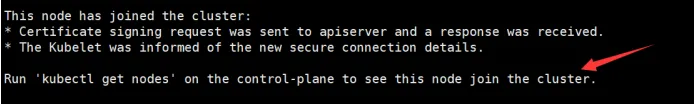

# Output like the above means k8s-master2 has joined the cluster

6.2.3 Check the cluster state on k8s-master1

[root@k8s-master1 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master1 NotReady control-plane,master 49m v1.20.6

k8s-master2 NotReady control-plane,master 39s v1.20.6

7. Scale the k8s cluster: add a worker node

On k8s-master1, print the join command:

[root@k8s-master1 ~]# kubeadm token create --print-join-command

# Output:

kubeadm join 192.168.216.163:16443 --token y23a82.hurmcpzedblv34q8 --discovery-token-ca-cert-hash sha256:1ba1b274090feecfef58eddc2a6f45590299c1d0624618f1f429b18a064cb728

Join k8s-node to the cluster by running that command on it:

[root@k8s-node ~]# kubeadm join 192.168.216.163:16443 --token y23a82.hurmcpzedblv34q8 --discovery-token-ca-cert-hash sha256:1ba1b274090feecfef58eddc2a6f45590299c1d0624618f1f429b18a064cb728 --ignore-preflight-errors=SystemVerification

# Once the join succeeds, k8s-node is part of the cluster and acts as a worker node

7.1 Check the node status on k8s-master1

[root@k8s-master1 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master1 NotReady control-plane,master 49m v1.20.6

k8s-master2 NotReady control-plane,master 39s v1.20.6

k8s-node NotReady <none> 59s v1.20.6

· The ROLES column of k8s-node is empty; <none> means the node is a worker node.

· To show worker in the ROLES column of k8s-node, label the node:

[root@k8s-master1 ~]# kubectl label node k8s-node node-role.kubernetes.io/worker=worker

Note: every node above is NotReady because no network plugin has been installed yet.

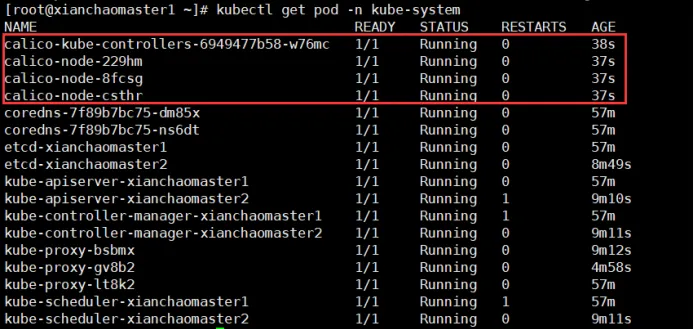

· Check the pod status

[root@k8s-master1 ~]# kubectl get pods -n kube-system # -n selects the namespace

NAME READY STATUS RESTARTS AGE

coredns-7f89b7bc75-lh28j 0/1 Pending 0 18h

coredns-7f89b7bc75-p7nhj 0/1 Pending 0 18h

etcd-k8s-master1 1/1 Running 0 18h

etcd-k8s-master2 1/1 Running 0 15m

kube-apiserver-k8s-master1 1/1 Running 0 18h

kube-apiserver-k8s-master2 1/1 Running 0 15m

kube-controller-manager-k8s-master1 1/1 Running 1 18h

kube-controller-manager-k8s-master2 1/1 Running 0 15m

kube-proxy-n26mf 1/1 Running 0 4m33s

kube-proxy-sddbv 1/1 Running 0 18h

kube-proxy-sgqm2 1/1 Running 0 15m

kube-scheduler-k8s-master1 1/1 Running 1 18h

kube-scheduler-k8s-master2 1/1 Running 0 15m

8. Install the Kubernetes network component: Calico

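· Upload calico.yaml to k8s-master1 and install the calico network plugin from the yaml file.

· If the offline package cannot be uploaded, download the manifest instead:

curl -L https://docs.projectcalico.org/v3.22/manifests/calico.yaml -O

8.1 Apply calico.yaml

[root@k8s-master1 ~]# kubectl apply -f calico.yaml

· The coredns pods are now in the Running state and working normally

8.2 Check the cluster state again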

[root@k8s-master1 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master1 Ready control-plane,master 3d7h v1.20.6

k8s-master2 Ready control-plane,master 3d7h v1.20.6

k8s-node Ready worker 3d7h v1.20.6

· STATUS is Ready on every node, which means the k8s cluster is running normally
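The Ready check on the kubectl get nodes output can also be scripted, which is handy while waiting for Calico to come up. A minimal sketch; the function name not_ready_nodes is hypothetical, and it treats any STATUS other than exactly Ready (including Ready,SchedulingDisabled) as not ready:

```shell
# Read `kubectl get nodes` output on stdin and print the name of
# every node whose STATUS column is not exactly "Ready".
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

Running kubectl get nodes | not_ready_nodes prints nothing once all three nodes have reached Ready.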